Chuanxiang Bi

Faculty of Engineering and Technology, Panyapiwat Institute of Management, Nonthaburi, Thailand

Jian Qu

Faculty of Engineering and Technology, Panyapiwat Institute of Management, Nonthaburi, Thailand

DOI: https://doi.org/10.14456/apst.2025.62

Keywords: Deep neural networks, Copy-Paste Attack, Adversarial attacks, ResNet26-CBAM, Adversarial trained

Abstract

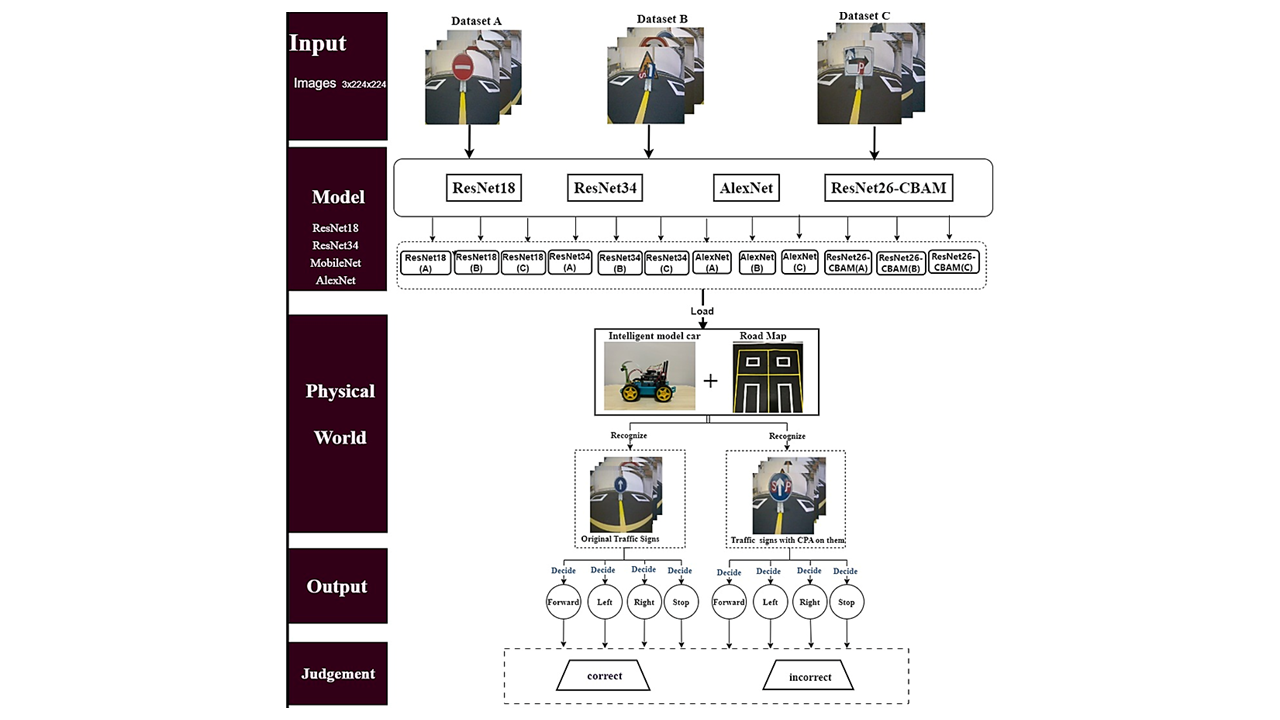

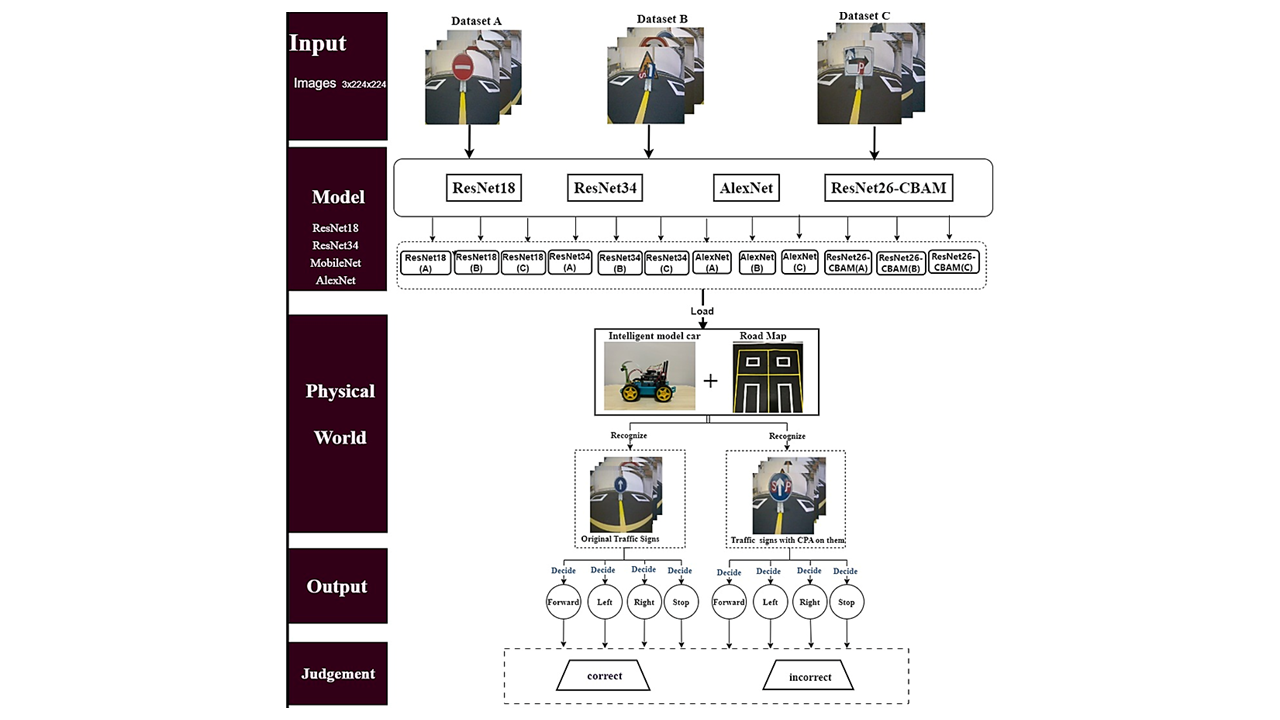

Deep neural networks (DNNs) are susceptible to adversarial attacks, which range from unseen perturbations to tiny, inconspicuous attacks that can cause a DNN to produce erroneous outputs. Although many adversarial attack methods have been proposed, most cannot be easily applied in the physical (real) world because they rely on over-detailed images that cannot be printed at a normal scale. In this paper, we propose a novel physical adversarial attack, the Copy-Paste Attack (CPA), which copies pattern elements from other images to make stickers and pastes them on the attack target. This attack can be printed out and applied in the physical world. Moreover, it reduces the recognition accuracy of deep neural networks by making the model misclassify traffic signs as the attack pattern. We conducted our experiments with a model intelligent car in the physical world. We tested three well-known DNN models on three different kinds of datasets. The experimental results demonstrate that our proposed Copy-Paste Attacks (CPAs) greatly interfere with the recognition rate of traffic signs. Moreover, our CPAs outperform the existing method PR2. Furthermore, we tested one of our previous ResNet26-CBAM (convolutional block attention module) models. Although it exhibits higher robustness against CPAs than other well-known CNN models, our ResNet26-CBAM was also misled by CPAs, with an accuracy of 60%. In addition, we trained the CNN models with the physical defense method of adversarial training; however, it had little effect on our CPAs.
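The copy-and-paste step described in the abstract can be illustrated digitally with a minimal sketch. This is not the authors' pipeline: the paper prints physical stickers, while the code below only simulates the idea on toy grayscale images stored as nested lists. The function names `copy_patch` and `paste_patch`, the image sizes, and the pixel values are all hypothetical.

```python
from copy import deepcopy


def copy_patch(source, top, left, height, width):
    """Copy a rectangular region (the 'sticker') out of a source image."""
    return [row[left:left + width] for row in source[top:top + height]]


def paste_patch(target, patch, top, left):
    """Return a copy of `target` with `patch` pasted at (top, left)."""
    if not patch or not patch[0]:
        raise ValueError("patch must be non-empty")
    height, width = len(patch), len(patch[0])
    fits_rows = 0 <= top and top + height <= len(target)
    fits_cols = 0 <= left and left + width <= len(target[0])
    if not (fits_rows and fits_cols):
        raise ValueError("patch does not fit inside the target image")
    result = deepcopy(target)  # leave the original target untouched
    for r in range(height):
        result[top + r][left:left + width] = patch[r]
    return result


# Toy 4x4 grayscale images (hypothetical values, for illustration only).
pattern_image = [[9, 9, 9, 9] for _ in range(4)]  # source of the attack pattern
sign_image = [[0, 0, 0, 0] for _ in range(4)]     # stand-in for a traffic sign

sticker = copy_patch(pattern_image, top=1, left=1, height=2, width=2)
attacked = paste_patch(sign_image, sticker, top=1, left=1)
```

In a real evaluation, an image like `attacked` would be passed to the classifier under test to check whether the prediction shifts toward the class of the pasted pattern; that step is omitted here because it depends on the specific model and dataset.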

How to Cite

Bi, C., & Qu, J. (2025). Copy-paste attacks: Targeted physical world attacks on self-driving. Asia-Pacific Journal of Science and Technology, 30(04), APST–30. https://doi.org/10.14456/apst.2025.62

License

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.